Apache Kafka Security Models Rundown

A rundown on how security is handled in Apache Kafka deployments.

Introduction

Apache Kafka security refers to the measures available to you and your organization to safeguard your data from unauthorized access, ensure the privacy and integrity of your messages, and maintain the overall trustworthiness of your Kafka infrastructure. These measures cut across data encryption, authentication, and authorization categories.

Apache Kafka serves as a central hub for data within organizations, encompassing data from various departments and applications. By default, Kafka operates in a permissive manner, allowing unrestricted access between brokers and external services. However, this openness can pose significant risks, especially when handling sensitive data or requiring controlled access. To address these concerns, it's crucial to implement robust security measures to ensure a more secure Kafka deployment.

Why Do You Need Apache Kafka Security?

Apache Kafka is often used to handle critical and sensitive data, such as financial transactions, personal information, or proprietary business data. Many industries, such as finance, healthcare, and government, have specific regulations and compliance requirements related to data security and privacy.

Securing your Apache Kafka deployment ensures that only authenticated and authorized users or applications can access and interact with the system, that your organization complies with regulatory requirements, and that unauthorized parties cannot manipulate or intercept the data.

When it comes to ensuring security in Apache Kafka, there are three fundamental considerations that need to be addressed: data encryption, authentication, and authorization. These aspects collectively contribute to a robust security framework for your Kafka deployments:

- Data encryption: Encrypting data both at rest and in transit is essential for protecting sensitive data within Kafka. Encryption at rest safeguards data stored on disk or in storage systems. Encryption in transit secures data as it moves between Kafka components or across the network. Together, they prevent unauthorized parties from eavesdropping on sensitive information and protect against data tampering.

- Authentication: By implementing robust user and client authentication mechanisms, your organization can verify the identities of individuals or applications before granting access to Kafka clusters. This step helps mitigate the risk of unauthorized data access and potential system misuse. Authentication also adds accountability to your Kafka ecosystem: by associating actions and operations with specific authenticated identities, you can track and audit activity within your Kafka deployment, which enables forensic analysis in the event of a security incident or policy violation.

- Authorization: Once users and clients are authenticated, it's necessary to enforce authorization policies to regulate their access privileges within Kafka. Authorization controls the specific actions users or applications can perform on your Kafka resources, such as reading from or writing to topics, managing consumer groups, or performing administrative tasks.

By implementing data encryption, authentication, and authorization measures, you can significantly enhance the security of your Kafka deployments. These security measures mitigate the risks associated with unauthorized access, data breaches, and privacy violations. However, you must strike a balance between security and performance, as some security mechanisms add computational overhead or administrative complexity to your deployments.

Apache Kafka Security Models

Apache Kafka supports several security models or protocols that can be configured to enhance the security of your Kafka deployments. Below are the main security models for Kafka, along with their benefits and potential drawbacks.

Encryption

By default, Apache Kafka transmits messages in an unencrypted form known as "plaintext." This lack of encryption leaves your Kafka messages vulnerable to eavesdropping, data tampering, and unauthorized access. Plaintext's lack of security features means it's only really suitable for development, testing, or environments that handle no sensitive data.

You can implement encryption measures such as Secure Sockets Layer/Transport Layer Security (SSL/TLS) encryption to enhance the security of the data in your Kafka deployment. SSL/TLS encryption is a widely used security protocol that provides data encryption, authentication, and integrity for network communication.

Kafka traffic flows both between clients (producers and consumers) and brokers and among the brokers within a cluster, so both paths need to be considered when you secure communication.

With SSL/TLS, you can encrypt data in transit so that all traffic between clients and the Kafka cluster, as well as between the Kafka brokers themselves, is encrypted. This is especially important when clients access the cluster through unsecured networks like the public internet, because it protects data from eavesdropping and unauthorized modification. Encrypting broker-to-broker and broker-to-ZooKeeper traffic is also crucial, even within private networks, to safeguard data while it is in motion.

By using self-signed certificates (primarily for internal environments) or certificates signed by a certificate authority (recommended for production environments), you can establish SSL/TLS encryption for Kafka communication. In addition to brokers providing certificates to clients, you can also configure clients to provide certificates to brokers, creating two-way authentication known as mutual TLS (mTLS).
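As a rough illustration of the client side of this setup, the sketch below renders a Kafka client properties file for one-way TLS or for mTLS. The property names (`security.protocol`, `ssl.truststore.location`, `ssl.keystore.location`, and so on) are standard Kafka client settings; the helper name `tls_client_properties`, the file paths, and the passwords are placeholders invented for this example.

```python
from typing import Optional


def tls_client_properties(
    truststore_path: str,
    truststore_password: str,
    keystore_path: Optional[str] = None,
    keystore_password: Optional[str] = None,
) -> str:
    """Render Kafka client properties for TLS; pass a keystore to enable mTLS."""
    props = {
        "security.protocol": "SSL",
        # The truststore holds the CA certificate(s) used to verify brokers.
        "ssl.truststore.location": truststore_path,
        "ssl.truststore.password": truststore_password,
    }
    if keystore_path is not None:
        # With mTLS the client also presents its own certificate to the broker.
        props["ssl.keystore.location"] = keystore_path
        props["ssl.keystore.password"] = keystore_password or ""
    return "\n".join(f"{key}={value}" for key, value in props.items())


# Hypothetical paths and passwords, for illustration only.
one_way = tls_client_properties("/etc/kafka/client.truststore.jks", "changeit")
mutual = tls_client_properties(
    "/etc/kafka/client.truststore.jks", "changeit",
    "/etc/kafka/client.keystore.jks", "changeit",
)
print(mutual)
```

For the client certificate to be checked at all, the broker side would also need `ssl.client.auth=required` on its TLS listener.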

In addition to encrypting data in transit, you should also consider securing data at rest, as Kafka persists data by writing it to disk. Apache Kafka does not provide built-in support for encrypting data at rest. Therefore, you need to rely on disk- or volume-level encryption mechanisms provided by your infrastructure. Public cloud providers typically offer options for encrypting storage volumes, such as encrypting Amazon EBS volumes with keys from AWS Key Management Service.

By implementing both data-in-transit encryption and data-at-rest encryption, you significantly enhance the security of your Apache Kafka deployment, protecting your data from interception, tampering, and unauthorized access. It's important to note that enabling encryption can introduce a performance impact due to the CPU overhead required for encryption and decryption processes.

Authentication

There are two mechanisms for authentication available with Apache Kafka: Secure Sockets Layer (SSL) and Simple Authentication and Security Layer (SASL).

SSL authentication is based on the SSL/TLS protocol, which provides encryption, integrity, and authentication for data transmission. It leverages certificates to establish trust between your Kafka clients and brokers.

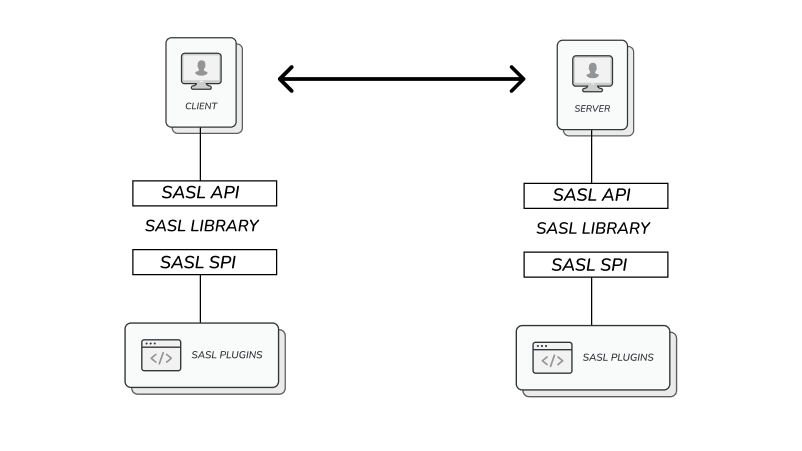

SASL is a pluggable authentication and data security framework that separates the authentication mechanism from the underlying Kafka protocol. It allows Kafka to support various authentication methods beyond SSL.

In the SASL authentication architecture, client and server applications interact with the underlying authentication mechanisms through a well-defined process. This process is facilitated by the SASL API, which acts as an interface.

While SSL can be used in conjunction with SASL to provide encryption and authentication together, SASL offers more options for fine-grained authentication control. Kafka supports various SASL authentication options, as outlined below.
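The way SSL and SASL combine can be summarized by Kafka's four listener security protocols. The following sketch lists them and picks one for a given combination; the protocol names are Kafka's own, while the dictionary layout and the `choose_protocol` helper are made up for illustration.

```python
# Kafka's four listener security protocols and what each one provides.
SECURITY_PROTOCOLS = {
    "PLAINTEXT": {"encrypted": False, "authentication": None},
    "SSL": {"encrypted": True, "authentication": "TLS certificates (mTLS)"},
    "SASL_PLAINTEXT": {"encrypted": False, "authentication": "SASL"},
    "SASL_SSL": {"encrypted": True, "authentication": "SASL"},
}


def choose_protocol(encrypt: bool, use_sasl: bool) -> str:
    """Return the security.protocol value for the requested combination."""
    if use_sasl:
        return "SASL_SSL" if encrypt else "SASL_PLAINTEXT"
    return "SSL" if encrypt else "PLAINTEXT"


for name, features in SECURITY_PROTOCOLS.items():
    print(name, features)
```

In most production setups the choice comes down to `SSL` (certificate-based authentication) or `SASL_SSL` (a SASL mechanism carried over an encrypted connection).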

SASL/PLAIN

This method involves classic username/password authentication. Usernames and passwords must be stored on Kafka brokers in advance, and any changes require a rolling restart. While straightforward, this method is not recommended for robust security. If SASL/PLAIN is used, enabling SSL encryption is crucial to prevent credentials from being sent as plaintext over your network.

Combining SASL authentication with SSL encryption corresponds to Kafka's SASL_SSL security protocol, with PLAIN selected as the SASL mechanism. Apache Kafka supports a default implementation of SASL/PLAIN that you can extend for use in production.
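A minimal sketch of the matching client settings is shown below. The property names and the `PlainLoginModule` class are Kafka's; the helper name `sasl_plain_properties` and the credentials are hypothetical, and the helper assumes the credentials contain no double quotes.

```python
def sasl_plain_properties(username: str, password: str) -> dict:
    """Build client settings for SASL/PLAIN over a TLS-encrypted connection."""
    # Assumption: neither value contains a double quote, so no escaping is done.
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "security.protocol": "SASL_SSL",  # SASL_PLAINTEXT would expose the password
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
    }


# Placeholder credentials, for illustration only.
props = sasl_plain_properties("alice", "alice-secret")
for key, value in props.items():
    print(f"{key}={value}")
```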

SASL/SCRAM

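SASL/SCRAM (Salted Challenge Response Authentication Mechanism) is also based on a username and password, but instead of sending the password itself, the client proves that it knows the password by answering a challenge with a value derived from a salted, iterated hash of it. The broker stores only salted hashes, never the cleartext password, which makes SCRAM a more secure option than SASL/PLAIN.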

Usernames and password hashes are stored in ZooKeeper, so this mechanism is best suited for Kafka deployments where ZooKeeper is on a secure network. This storage option enables scalable security without requiring broker restarts. As with SASL/PLAIN, enabling SSL/TLS encryption is necessary to protect SCRAM exchanges during transmission. Salting and hashing, combined with strong passwords and high iteration counts, help safeguard against dictionary or brute-force attacks and impersonation if ZooKeeper security is compromised.

Kafka supports two variants, SCRAM-SHA-256 and SCRAM-SHA-512, which differ in the hash functions they use and the length of the hash output. SCRAM-SHA-512 offers a higher level of security at the cost of increased computational requirements. The choice between the two depends on the specific security needs and performance considerations of your Kafka deployment.
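To make the difference between the two variants concrete, here is a simplified sketch of the key derivation and proof check that SCRAM defines in RFC 5802, using only Python's standard library. It is not Kafka's implementation: it skips password normalization (SASLprep) and the actual message exchange, and `auth_message` is a placeholder for the concatenated handshake messages. The function names are invented for this example.

```python
import hashlib
import hmac
import os


def scram_keys(password: str, salt: bytes, iterations: int, algo: str = "sha256"):
    """Derive the (ClientKey, StoredKey) pair; the server keeps only StoredKey."""
    # SaltedPassword = Hi(password, salt, i), which is PBKDF2 with HMAC.
    salted = hashlib.pbkdf2_hmac(algo, password.encode("utf-8"), salt, iterations)
    client_key = hmac.new(salted, b"Client Key", algo).digest()
    stored_key = hashlib.new(algo, client_key).digest()
    return client_key, stored_key


def client_proof(client_key: bytes, stored_key: bytes, auth_message: bytes, algo: str) -> bytes:
    """ClientProof = ClientKey XOR HMAC(StoredKey, AuthMessage)."""
    signature = hmac.new(stored_key, auth_message, algo).digest()
    return bytes(a ^ b for a, b in zip(client_key, signature))


def server_verifies(stored_key: bytes, proof: bytes, auth_message: bytes, algo: str) -> bool:
    """Recover ClientKey from the proof and compare its hash with StoredKey."""
    signature = hmac.new(stored_key, auth_message, algo).digest()
    recovered = bytes(a ^ b for a, b in zip(proof, signature))
    return hmac.compare_digest(hashlib.new(algo, recovered).digest(), stored_key)


salt = os.urandom(16)
auth_message = b"client-first,server-first,client-final"  # placeholder value
for algo in ("sha256", "sha512"):
    client_key, stored_key = scram_keys("pencil", salt, 4096, algo)
    proof = client_proof(client_key, stored_key, auth_message, algo)
    print(algo, len(stored_key), server_verifies(stored_key, proof, auth_message, algo))
```

The stored key is 32 bytes with SHA-256 and 64 bytes with SHA-512, and in neither case does the password itself cross the wire.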

SASL/GSSAPI (Kerberos)

The Generic Security Services API (GSSAPI), by itself, does not provide any security or authentication. However, the GSSAPI is widely used as a means to implement other security mechanisms, such as Kerberos. The Kerberos protocol uses strong cryptography so that a client can prove its identity to a server (and vice versa) across an insecure network connection.

Kafka supports SASL/Kerberos, which uses the Kerberos ticket mechanism known for its robust authentication capabilities. It's commonly implemented in environments using Microsoft Active Directory. SASL/GSSAPI is well-suited for large enterprises, enabling centralized security management from within their Kerberos server. SSL encryption is optional in SASL/GSSAPI, but it's recommended for enhanced security. Setting up Kafka with Kerberos is more complex than the other options but offers significant security benefits.
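For a sense of what the client configuration involves, the sketch below assembles typical settings for a keytab-based Kerberos login. The property names and the `Krb5LoginModule` class are standard; the helper name, keytab path, principal, and the service name `kafka` are assumptions chosen for illustration and must match your own Kerberos setup.

```python
def sasl_gssapi_properties(keytab_path: str, principal: str, use_tls: bool = True) -> dict:
    """Build client settings for SASL/GSSAPI (Kerberos) with a keytab login."""
    jaas = (
        "com.sun.security.auth.module.Krb5LoginModule required "
        "useKeyTab=true storeKey=true "
        f'keyTab="{keytab_path}" principal="{principal}";'
    )
    return {
        # TLS is optional with Kerberos but recommended.
        "security.protocol": "SASL_SSL" if use_tls else "SASL_PLAINTEXT",
        "sasl.mechanism": "GSSAPI",
        # Assumed to match the primary of the brokers' Kerberos principal.
        "sasl.kerberos.service.name": "kafka",
        "sasl.jaas.config": jaas,
    }


# Hypothetical keytab path and principal.
krb_props = sasl_gssapi_properties(
    "/etc/security/keytabs/kafka_client.keytab", "kafka-client-1@EXAMPLE.COM"
)
for key, value in krb_props.items():
    print(f"{key}={value}")
```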

SASL/OAUTHBEARER

SASL/OAUTHBEARER uses OAuth 2.0 for authentication and authorization. The OAuth 2.0 authorization framework provides a mechanism for a third-party application to obtain restricted access to an HTTP service, acquiring access to resources either by facilitating an approval process between the resource owner and the service or by obtaining access independently.

The SASL/OAUTHBEARER mechanism extends the use of the OAuth 2 framework beyond HTTP contexts, allowing its application in non-HTTP scenarios. It enables clients to authenticate and obtain access tokens using OAuth 2.0 flows, facilitating secure integration with external identity providers.

Using OAUTHBEARER in Kafka provides several benefits. It allows for centralized authentication and authorization management through external identity providers, reducing the need to manage user credentials within Kafka itself. It also enables seamless integration with existing authentication systems and supports fine-grained access control based on the scopes and permissions granted by the external identity provider.

However, when using OAUTHBEARER in Kafka, careful consideration must be given to security aspects. The default implementation of OAUTHBEARER in Kafka is not recommended for production deployments as it creates and validates unsecured JSON Web Tokens (JWTs). As such, when used in production environments, it should be paired with signature verification and encryption to prevent tampering.
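The risk of unsecured JWTs is easy to see in a few lines of standard-library Python. The sketch below builds a token whose header declares `alg` as `none` and whose signature part is empty, which anyone can forge, and shows a basic pre-check that rejects such tokens. The function names and claims are hypothetical, and the pre-check is not a full validator: a production setup would also verify the signature against the identity provider's keys.

```python
import base64
import json


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as JWTs do."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text: str) -> bytes:
    """Decode base64url text, restoring the stripped padding first."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def unsecured_jwt(claims: dict) -> str:
    """Build an unsecured JWT: alg is 'none' and the signature part is empty."""
    header = b64url(json.dumps({"alg": "none"}).encode())
    payload = b64url(json.dumps(claims).encode())
    return f"{header}.{payload}."


def has_signature(token: str) -> bool:
    """Pre-check only: reject tokens that declare alg=none or carry no signature."""
    header_b64, _payload_b64, signature = token.split(".")
    header = json.loads(b64url_decode(header_b64))
    return header.get("alg", "none").lower() != "none" and signature != ""


# Anyone can mint this token; nothing ties it to an identity provider.
forged = unsecured_jwt({"sub": "admin", "scope": "kafka-cluster"})
print(has_signature(forged))
```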

Authorization

Authorization in Apache Kafka is responsible for managing the permissions and privileges granted to users or applications, dictating their actions and access rights within the Kafka system. It encompasses control over topics, partitions, consumer groups, and administrative tasks.

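To facilitate authorization, two approaches are common in Kafka deployments: access control lists (ACLs), which are built into Apache Kafka, and role-based access control (RBAC), which some Kafka distributions and managed services add on top.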

Access Control Lists

ACLs provide a way to define fine-grained access control policies for resources in Kafka, limiting access to specific resources and operations to authorized users. Kafka ships with a pluggable authorizer implementation, and in ZooKeeper-based clusters it stores ACLs in Apache ZooKeeper.

ACLs can be broken down into two key components: the resource pattern that defines the Kafka resource being accessed and a rule that specifies the user or client and the permissions being granted (such as read, write, create, or delete). For example, an ACL granting read and write topic permissions to a user named sampleuser could read like this:

principal: "User:sampleuser"

resource: Topic "my-topic"

operation: Read, Write

It's crucial to configure ACLs properly to ensure appropriate access control. When an authorizer is configured and a resource has no associated ACLs, access to that resource is restricted to superusers only.
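The evaluation rules described above can be modeled in a few lines. This is a deliberately simplified sketch, not Kafka's authorizer: it ignores wildcards, prefixed resource patterns, and host restrictions, and the `Acl` class and `is_authorized` function are names invented for this example. It does capture the main ideas: superusers bypass ACLs, a deny rule wins over an allow rule, and a request with no matching ACL is rejected.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Acl:
    principal: str             # e.g. "User:sampleuser"
    resource_type: str         # e.g. "Topic"
    resource_name: str         # e.g. "my-topic"
    operation: str             # e.g. "Read"
    permission: str = "Allow"  # "Allow" or "Deny"


def is_authorized(acls, superusers, principal, resource_type, resource_name, operation):
    """Return True if the principal may perform the operation on the resource."""
    if principal in superusers:
        return True  # superusers are never restricted by ACLs
    matching = [
        acl for acl in acls
        if acl.principal == principal
        and acl.resource_type == resource_type
        and acl.resource_name == resource_name
        and acl.operation == operation
    ]
    if any(acl.permission == "Deny" for acl in matching):
        return False  # an explicit deny takes precedence
    return any(acl.permission == "Allow" for acl in matching)


# The sampleuser ACL from the example above, expressed as two bindings.
acls = [
    Acl("User:sampleuser", "Topic", "my-topic", "Read"),
    Acl("User:sampleuser", "Topic", "my-topic", "Write"),
]
superusers = {"User:admin"}
print(is_authorized(acls, superusers, "User:sampleuser", "Topic", "my-topic", "Read"))
print(is_authorized(acls, superusers, "User:sampleuser", "Topic", "other-topic", "Read"))
```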

Role-Based Access Control

Configuring and managing fine-grained authorization policies through ACLs can be complex, particularly in large and dynamic environments. For this reason, some Kafka distributions and managed services, such as Confluent's, also support role-based access control (RBAC), an approach that manages system access by assigning roles to users within an organization. RBAC operates on the concept of predefined roles, each carrying a specific set of privileges, which are assigned to users through role bindings.

With RBAC, administrators can define roles such as "Producer," "Consumer," or "Administrator," each having a predefined set of permissions. These roles can be granted to users or groups of users, known as principals. The role bindings determine the access rights for each principal.

Roles encompass a collection of permissions that can be associated with a resource, enabling the execution of role-specific actions on that particular resource. When binding a resource to a role, it's necessary to grant the role to a principal, ensuring the authorized access and utilization of the associated privileges. RBAC offers several benefits for your Kafka deployment, including centralized authentication and authorization, granular access control, and easier, more scalable authorization management.
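The relationship between roles, principals, and role bindings can be sketched as a small data model. The role names come from the examples above; the permission sets, the `RoleBinding` class, and the `can` function are hypothetical and do not correspond to any vendor's actual RBAC API.

```python
from dataclasses import dataclass

# Hypothetical roles, each mapped to the operations it permits.
ROLES = {
    "Producer": {"Write", "Describe"},
    "Consumer": {"Read", "Describe"},
    "Administrator": {"Read", "Write", "Describe", "Create", "Delete", "Alter"},
}


@dataclass(frozen=True)
class RoleBinding:
    principal: str  # user or group the role is granted to
    role: str       # key into ROLES
    resource: str   # resource the role applies to


def can(bindings, principal: str, operation: str, resource: str) -> bool:
    """Check whether any binding grants the operation on the resource."""
    return any(
        b.principal == principal
        and b.resource == resource
        and operation in ROLES[b.role]
        for b in bindings
    )


bindings = [
    RoleBinding("User:orders-service", "Producer", "Topic:orders"),
    RoleBinding("Group:analytics", "Consumer", "Topic:orders"),
]
print(can(bindings, "User:orders-service", "Write", "Topic:orders"))
print(can(bindings, "Group:analytics", "Write", "Topic:orders"))
```

Compared with ACLs, changing what a "Consumer" may do means editing one role definition instead of touching every principal's rules.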

Kafka Vendors and Their Security Features

Different cloud and data organizations provide managed Kafka services with diverse security models. The following are some of the most popular offerings and their security features:

- Confluent: Confluent offers Confluent Cloud, a fully managed Kafka service that provides a scalable and secure platform for running Kafka clusters in the cloud. It includes various security features such as encryption at rest and in transit, built-in compliance, RBAC, and integration with authentication and authorization providers.

- Amazon Web Services (AWS): AWS provides Amazon Managed Streaming for Apache Kafka (Amazon MSK), which is a fully managed service for running Apache Kafka on AWS. It simplifies the setup, management, and operation of Kafka clusters. AWS offers security features like Virtual Private Cloud (VPC) support, encryption at rest and in transit, and integration with AWS Identity and Access Management (IAM) for access control.

- Red Hat: Red Hat offers Red Hat AMQ Streams, a Kafka distribution based on the open-source Strimzi project. It provides enterprise-grade features such as secure communication using TLS encryption; authentication using certificates, TLS, SASL, or OAuth; and integration with Red Hat Single Sign-On for centralized access control.

- Aiven: Aiven is a cloud-native platform that offers managed Kafka services along with other open-source data technologies. Aiven provides robust security measures such as end-to-end encryption, access control using ACLs, and integration with external authentication providers like Google Cloud IAM or LDAP.

- Google Cloud Platform (GCP): GCP provides Google Cloud Pub/Sub, a managed messaging service that is not Kafka itself but offers similar messaging capabilities with additional features. It ensures security through encryption at rest and in transit as well as IAM for access control. It also integrates with other GCP security services, like Identity-Aware Proxy (IAP).

- IBM Cloud: IBM offers IBM Event Streams, a fully managed Kafka service on the IBM Cloud platform. It provides robust security features such as TLS encryption for data in transit, authentication using API keys, and integration with IBM Cloud Identity and Access Management (IAM) for access control.

- Azure Event Hubs: Microsoft Azure offers Azure Event Hubs, a scalable and fully managed event streaming platform. While not strictly Kafka, it provides similar functionality and integrates well with existing Kafka applications. Azure Event Hubs offers security features like TLS encryption, Azure Active Directory integration for authentication, and RBAC for access control.

- Heroku: Heroku, a cloud platform as a service (PaaS), provides Apache Kafka on Heroku, a fully managed Kafka service that allows developers to build scalable and secure Kafka-based applications. It offers features like encryption at rest and in transit, role-based access control, and integration with Heroku Private Spaces for network isolation.

The security features of each vendor are summarized in the following table:

Conclusion

In this article, you explored the various security models available in Apache Kafka, such as SSL/TLS for encryption, SASL for authentication, and fine-grained authorization using ACLs and RBAC. You also gained insights into the security capabilities provided by managed Kafka platforms such as Confluent Cloud, Amazon MSK, and Red Hat AMQ Streams.

To enhance the security and resilience of your Kafka environment, consider integrating Aklivity's Zilla into your infrastructure. Aklivity is pioneering API infrastructure for streaming data, and it offers a powerful, open-source, streaming-native API gateway called Zilla.

With Zilla, you can interface web apps, IoT clients, and microservices to Apache Kafka via declaratively defined, stateless API endpoints. Aklivity's solution empowers you to make any application or service event-driven, leveraging the full potential of streaming data. Try out Zilla with your Kafka vendor of choice.
