Bring your own REST APIs for Apache Kafka

Zilla enables application-specific REST APIs. See how it's not just another Kafka-REST proxy.

Introduction

The Apache Kafka open source message streaming platform is widely adopted as the core of many modern event-driven application architectures.

Use cases include publish-and-subscribe messaging, website activity tracking, log or metrics aggregation, stream processing, and more.

Although languages running directly on the Java VM, such as Java and Scala, have fully supported client libraries provided by the Apache Kafka project, other language client libraries are maintained separately by independent projects in the Kafka ecosystem.

The Kafka client APIs and network protocol are complex, so it is challenging for client libraries in other languages to reach the same level of maturity, even for the baseline capabilities. As new features are added to the official client libraries, those in other languages often fall behind.

These challenges have given rise to projects such as the Confluent REST Proxy, Karapace REST Proxy and Nakadi Event Broker. Each project provides a system-level REST API, allowing clients to interact with Kafka topics using standard HTTP clients, without the need for a native Kafka client library.

For example, publishing a message to Kafka via REST is handled by each of the projects in a similar way.

Topic-Centric REST APIs

The Confluent REST Proxy or Karapace REST Proxy lets you publish records (messages) to a Kafka topic, such as test1, by sending an HTTP POST request to an endpoint named after the topic.
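A minimal sketch of such a request, assuming the v2 produce endpoint of the Confluent REST Proxy and a proxy listening at `http://localhost:8082`. The record key and value are made up for illustration, and the request is only built here, not sent.

```python
import json
import urllib.request

# Assumed proxy address; adjust to wherever the REST proxy is running.
PROXY_URL = "http://localhost:8082"


def build_produce_request(topic: str, records: list) -> urllib.request.Request:
    """Build (but do not send) a produce request for the given Kafka topic."""
    body = json.dumps({"records": records}).encode("utf-8")
    return urllib.request.Request(
        url=f"{PROXY_URL}/topics/{topic}",
        data=body,
        method="POST",
        # The content type tells the proxy that keys and values are JSON.
        headers={"Content-Type": "application/vnd.kafka.json.v2+json"},
    )


# Illustrative record: the key and value are hypothetical.
request = build_produce_request(
    "test1", [{"key": "task-1", "value": {"name": "Read the docs"}}]
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
# To send it to a running proxy: urllib.request.urlopen(request)
```

Note that the topic name appears in the URL, and the body is shaped around records, keys and values.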

This REST API design clearly exposes the system-level concepts of Apache Kafka, such as topics, records, keys and values.

Event-Centric REST APIs

The Nakadi Event Broker makes an improvement by abstracting away the details of Apache Kafka topics, allowing events to be published by event type rather than directly to a named Kafka topic.
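A minimal sketch of publishing an event this way, assuming Nakadi's event publishing endpoint (`POST /event-types/{name}/events`), a broker at `http://localhost:8080`, and a hypothetical `task-created` event type. The request is built but not sent.

```python
import json
import uuid
import urllib.request
from datetime import datetime, timezone

# Assumed Nakadi address; adjust to your deployment.
NAKADI_URL = "http://localhost:8080"


def build_publish_request(event_type: str, payloads: list) -> urllib.request.Request:
    """Build (but do not send) a request publishing a batch of events."""
    events = [
        {
            # Each event carries its own id and timestamp in a metadata block.
            "metadata": {
                "eid": str(uuid.uuid4()),
                "occurred_at": datetime.now(timezone.utc).isoformat(),
            },
            **payload,
        }
        for payload in payloads
    ]
    return urllib.request.Request(
        url=f"{NAKADI_URL}/event-types/{event_type}/events",
        data=json.dumps(events).encode("utf-8"),
        method="POST",
        headers={"Content-Type": "application/json"},
    )


# "task-created" is a hypothetical event type used only for illustration.
request = build_publish_request("task-created", [{"name": "Read the docs"}])
print(request.get_method(), request.full_url)
```

The client names an event type and sends a batch of events. Which Kafka topic backs that event type is left to the broker.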

This REST API design takes an event-centric view, so clients are not exposed to low-level implementation details such as Kafka topic names.

Application-Centric REST APIs

While publishing messages to a Kafka topic via HTTP is certainly useful, this only covers one-way event publishing. The HTTP response is returned immediately, indicating that the message was successfully published, but there is no opportunity for a microservice to first process the message and influence the HTTP response.

How can we provide an application-centric REST API that unlocks Kafka event-driven architectures and is also suitable for consumption by web and mobile clients, say to create a new Task for a Todo Application?

An application-centric REST API for Kafka gives the developer freedom to define their own HTTP mapping to Kafka, with control over the topic, message key, message headers, message value and reply-to topic.
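To make the shape of such a mapping concrete, here is a small Python sketch. It is only an illustration of the decisions the mapping captures, not how Zilla is configured. The route, topic names and the `idempotency-key` header are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HttpRequest:
    method: str
    path: str
    headers: dict
    body: bytes


@dataclass(frozen=True)
class KafkaRecord:
    topic: str
    key: str
    headers: dict
    value: bytes
    reply_to: str


def map_create_task(request: HttpRequest) -> KafkaRecord:
    """Map POST /tasks onto a Kafka record (all names are hypothetical)."""
    if (request.method, request.path) != ("POST", "/tasks"):
        raise ValueError("no mapping defined for this request")
    return KafkaRecord(
        topic="task-commands",                             # developer-chosen topic
        key=request.headers.get("idempotency-key", ""),    # developer-chosen key
        headers={"content-type": request.headers.get("content-type", "")},
        value=request.body,                                # HTTP payload as the value
        reply_to="task-replies",                           # where the reply is expected
    )


record = map_create_task(
    HttpRequest(
        method="POST",
        path="/tasks",
        headers={"content-type": "application/json", "idempotency-key": "key-123"},
        body=b'{"name":"Read the docs"}',
    )
)
print(record.topic, record.key, record.reply_to)
```

The HTTP client only sees `POST /tasks`. The topic, key and reply-to topic are choices made by the API developer and stay hidden from the client.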

Event-driven microservices need support for correlated request-response over Kafka topics to provide an on-ramp from web and mobile REST APIs to event-driven architecture.

This greatly simplifies interactions between all event-driven microservices attached to Kafka, whether or not they are accessed via REST APIs.

REST APIs over Kafka using Zilla

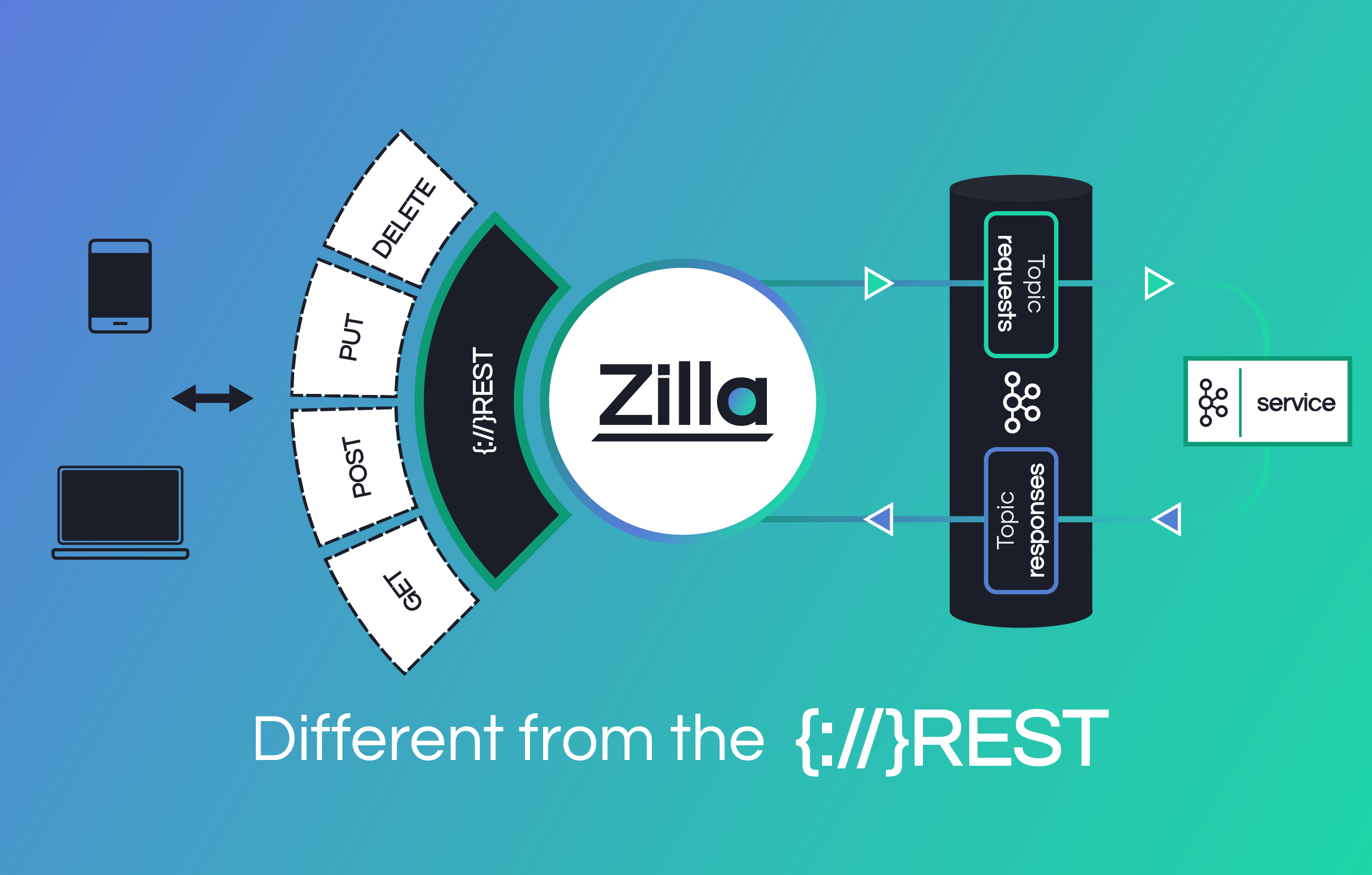

The Zilla open source project provides a code-free way to map REST APIs to Kafka based event-driven microservices, as shown below.

Zilla is an open source API gateway for connecting web and mobile applications to event-driven microservices using standard protocols, such as HTTP, Server-Sent Events and Kafka.

Zilla is designed on the fundamental principle that every data flow is a stream, and that streams can be composed together to create efficient protocol transformation pipelines. This concept of a stream holds at both the network level for communication protocols and also at the application level for data processing.

When a POST request is received by Zilla, a message is produced to the requests topic, with HTTP headers delivered as the Kafka message headers and the HTTP payload delivered as the Kafka message value.

When the correlated response arrives at Zilla, the HTTP response is returned to the client, with Kafka message headers delivered as HTTP response headers.
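The flow can be simulated in a few lines of Python. This is a sketch of the idea rather than Zilla's implementation: the two topics are in-memory lists, and the `correlation-id` header name is a hypothetical placeholder for whatever header carries the correlation.

```python
import uuid

# In-memory stand-ins for the two Kafka topics (append-only lists).
requests_topic: list = []
responses_topic: list = []

CORRELATION_HEADER = "correlation-id"  # hypothetical header name


def gateway_send(http_headers: dict, http_body: bytes) -> str:
    """Produce the HTTP request to the requests topic; return its correlation id."""
    correlation_id = str(uuid.uuid4())
    requests_topic.append({
        "headers": {**http_headers, CORRELATION_HEADER: correlation_id},
        "value": http_body,
    })
    return correlation_id


def microservice_poll(offset: int) -> int:
    """Process requests from the given offset, replying with the same correlation id."""
    for record in requests_topic[offset:]:
        responses_topic.append({
            "headers": {
                CORRELATION_HEADER: record["headers"][CORRELATION_HEADER],
                "content-type": "application/json",
            },
            "value": b'{"status":"created"}',
        })
    return len(requests_topic)


def gateway_receive(correlation_id: str):
    """Find the correlated response and split it into HTTP headers and payload."""
    for record in responses_topic:
        if record["headers"][CORRELATION_HEADER] == correlation_id:
            http_headers = {
                k: v for k, v in record["headers"].items() if k != CORRELATION_HEADER
            }
            return http_headers, record["value"]
    return None


cid = gateway_send({"content-type": "application/json"}, b'{"name":"Read the docs"}')
microservice_poll(0)
print(gateway_receive(cid))
```

The microservice never sees HTTP. It reads a record, does its work, and writes a reply that carries the same correlation value.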

Idempotent Requests

When a client repeats a request, the persistence provided by Kafka means the correlated response in the responses topic can still be observed, letting the client receive the previously completed response immediately. The event-driven microservice can also detect the duplicated request and avoid re-processing, as the previous response is already available.
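A minimal sketch of the duplicate detection described above, assuming each request carries a client-supplied idempotency key (a hypothetical detail, as is every name below). The responses topic is modeled as an append-only list.

```python
# Append-only stand-in for the persisted responses topic.
responses_topic: list = []
# Side effects performed by the service, to show that work is not repeated.
created_tasks: list = []


def find_response(idempotency_key: str):
    """Look for a previously completed response to the same request."""
    for record in responses_topic:
        if record["key"] == idempotency_key:
            return record["value"]
    return None


def handle_create_task(idempotency_key: str, task_name: str) -> str:
    """Create a task once; replay the stored response for repeated requests."""
    previous = find_response(idempotency_key)
    if previous is not None:
        return previous  # duplicate request: no re-processing
    created_tasks.append(task_name)
    response = f"created task #{len(created_tasks)}: {task_name}"
    responses_topic.append({"key": idempotency_key, "value": response})
    return response


first = handle_create_task("key-123", "Read the docs")
second = handle_create_task("key-123", "Read the docs")
print(first == second, len(created_tasks))  # prints: True 1
```

The repeated call returns the stored response and creates no second task.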

Asynchronous Request-Response

When request processing is long-running, or the result of a request needs to be provided to another process, asynchronous request-response over HTTP is needed.

First the client request is made, indicating a preference for an asynchronous response.

Then the 202 Accepted response is received, indicating the location to check for the completed response.

Making a request to the provided location returns the actual response to the original request.
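These three steps can be sketched with a toy in-memory gateway. The sketch assumes the preference is expressed with the standard `Prefer: respond-async` header. The `/tasks` route and the `;cid=` location format are hypothetical.

```python
import uuid

# Correlation id -> completed (status, payload), or None while still pending.
pending: dict = {}


def handle(method: str, path: str, headers: dict, body: bytes = b""):
    """Toy gateway returning (status, headers, body) for each request."""
    if method == "POST" and path == "/tasks" and headers.get("prefer") == "respond-async":
        correlation_id = uuid.uuid4().hex
        pending[correlation_id] = None
        # Step 2: accepted, with the location to check for the completed response.
        return 202, {"location": f"/tasks;cid={correlation_id}"}, b""
    if method == "GET" and path.startswith("/tasks;cid="):
        correlation_id = path.split("cid=", 1)[1]
        if correlation_id not in pending:
            return 404, {}, b""
        result = pending[correlation_id]
        if result is None:
            return 202, {"location": path}, b""  # not ready yet, check again later
        status, payload = result
        # Step 3: the actual response to the original request.
        return status, {"content-type": "application/json"}, payload
    return 404, {}, b""


def complete(correlation_id: str, status: int, payload: bytes) -> None:
    """Stand-in for the correlated response arriving from the microservice."""
    pending[correlation_id] = (status, payload)


# Step 1: the client asks for an asynchronous response.
status, response_headers, _ = handle(
    "POST", "/tasks", {"prefer": "respond-async"}, b'{"name":"Read the docs"}'
)
location = response_headers["location"]
early = handle("GET", location, {})
complete(location.split("cid=", 1)[1], 201, b'{"id":"task-1"}')
final = handle("GET", location, {})
print(status, early[0], final[0])  # prints: 202 202 201
```

Because the location is an ordinary URL, it can be handed to another process, which can then fetch the completed response itself.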

The Kafka event-driven microservice does not require any changes to support asynchronous request-response via Zilla.

Conclusion

Event-driven architecture brings many benefits to the design, development and deployment of microservices, yet integration of request-response style web and mobile clients is still evolving.

Solutions available today typically provide gateway-specific REST APIs, in terms of Kafka topics and other system-related concepts, exposing internal implementation details to HTTP clients.

By contrast, the Zilla open source project can integrate application-specific REST APIs with Apache Kafka to bridge the gap between request-response and event-driven without compromises, leading to a greatly simplified architectural deployment and fewer moving parts.

Learn more about Zilla with our Get Started guide to Build a Todo Application.
