Rethinking Kafka Migration in the Age of Data Products

Kafka vendor migration has long been treated as an unavoidable disruption. Whether driven by cost, performance, cloud strategy, or vendor risk, migrations typically require months of planning and coordinated client upgrades, and they carry operational risk across dozens or hundreds of teams.

TL;DR

The problem: Kafka migrations are painful because applications are tightly coupled to Kafka vendors, protocols, and schemas.

Every backend change forces coordinated client upgrades, creates risk, and slows teams down.

The solution: Data products introduce a stable abstraction layer that decouples producers, consumers, and AI agents from Kafka infrastructure.

Zilla Platform operationalizes this model by exposing Kafka streams as governed, API-first data products and isolating vendor-specific behavior behind stable contracts.

Kafka backends can be added, migrated, or replaced with no client changes, no downtime, and no coordinated cutovers.

We’re opening early access for partners and technology leaders to experience the platform firsthand and help shape what comes next.

Request Access to the Zilla Data Platform

Which Migration Strategy Is Right?

Before exploring how data products solve vendor migration challenges, let’s examine why traditional Kafka migration strategies struggle.

Big Bang Cutover

In a big bang cutover, the entire platform (all producers and consumers) transitions from the old Kafka backend to the new one in a single coordinated moment.

While conceptually simple, this approach rarely succeeds at scale. It requires every producer and consumer to be upgraded in lockstep, risks broad outages, and demands complex rollback logic when even a single system fails after cutover.

Dual Write and Dual Read

Dual write and dual read mitigate risk by running both source and target Kafka clusters in parallel.

Producers simultaneously write to both systems, and consumers adapt to read from either. However, this duplicates infrastructure, adds runtime complexity to client logic, and introduces consistency challenges as ordering or offsets diverge.
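To make the offset problem concrete, here is a toy in-memory model, not a real Kafka client. `Cluster`, `dual_write`, and `resume` are hypothetical names; each `Cluster` stands in for a single topic partition that assigns offsets in arrival order. When one write to the target fails and is retried later, the same records end up at different offsets in each cluster, so a committed offset cannot simply be carried from one cluster to the other.

```python
from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Toy stand-in for one partition of a topic: an append-only log."""
    name: str
    log: list = field(default_factory=list)

    def append(self, record: str) -> int:
        # Each cluster assigns offsets independently, in arrival order.
        self.log.append(record)
        return len(self.log) - 1


def dual_write(source: Cluster, target: Cluster, records, failing=frozenset()) -> None:
    """Write every record to both clusters. Writes to `target` for records in
    `failing` fail the first time and are retried after the batch."""
    retries = []
    for record in records:
        source.append(record)
        if record in failing:
            retries.append(record)  # target write failed; queue for retry
        else:
            target.append(record)
    for record in retries:
        target.append(record)  # retried writes land later than in the source


def resume(cluster: Cluster, committed_offset: int) -> list:
    """Kafka-style resume: the committed offset is the next offset to read."""
    return cluster.log[committed_offset:]


source, target = Cluster("source"), Cluster("target")
dual_write(source, target, ["a", "b", "c"], failing={"b"})
# source.log == ['a', 'b', 'c'], target.log == ['a', 'c', 'b']

# A consumer that processed "a" and "b" on the source commits offset 2.
# Resuming at offset 2 on the target re-reads "b" and never sees "c".
moved = resume(target, 2)  # ['b']
```

Dual-read consumers therefore need per-cluster offsets or deduplication logic, which is the client-side complexity this strategy pushes onto application teams.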

Client-by-Client Migration

Incremental client migration seems safer, but it exposes the core coupling problem: applications are tightly tied to Kafka clients, protocols, and semantics.

Migrating each client requires heavy lifting, including library upgrades, regression testing, and deployment coordination, stretching timelines over quarters and consuming engineering cycles.

The Core Migration Problem

All of these strategies fail to address the root issue: Applications are directly coupled to Kafka infrastructure.

Clients understand brokers, protocols, client libraries, partition semantics, and vendor-specific behaviors. As long as applications interface directly with Kafka, vendor migration is visible, disruptive, and risky.

The fundamental problem is not Kafka itself. It’s the absence of a stable abstraction boundary between applications (including modern, event-driven consumers) and the underlying streaming system.

Data Products Change the Migration Model

Data products provide that abstraction. They represent streams as versioned, schema-governed products with stable contracts. Applications interact with these products rather than raw Kafka topics. Kafka (and any alternative vendor) becomes an internal implementation detail hidden behind the data product layer.

Data products enforce:

- Contract stability: schemas and backward compatibility guarantees

- Governance: ownership, access control, and change policies

- Multiple consumption modalities: APIs, event subscriptions, historical queries

This separation transforms migration from a cross-team coordination nightmare into a platform-centric implementation exercise.
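A minimal sketch of what such a product boundary might record, assuming a simple in-house model rather than any real platform's format. The `DataProduct` and `Binding` classes, their fields, and the `can_read` check are hypothetical. Applications depend only on the product's name, version, and schema; the backing cluster and topic live in a separate, platform-private binding that can change during a migration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProduct:
    """Client-facing contract: what consumers are allowed to depend on."""
    name: str
    version: str
    schema: dict                      # contract stability: field name -> type
    owner: str                        # governance: accountable team
    compatibility: str = "BACKWARD"   # governance: change policy for schemas
    modalities: tuple = ("api", "event-subscription", "historical-query")
    allowed_readers: frozenset = frozenset()


@dataclass
class Binding:
    """Platform-private implementation detail: where the data actually lives."""
    cluster: str
    topic: str


def can_read(product: DataProduct, principal: str) -> bool:
    # Access control is enforced at the product boundary, not in each client.
    return principal in product.allowed_readers


orders = DataProduct(
    name="orders",
    version="1.0.0",
    schema={"order_id": "string", "amount": "double"},
    owner="commerce-team",
    allowed_readers=frozenset({"billing-service", "fraud-agent"}),
)
binding = Binding(cluster="apache-kafka-prod", topic="orders.v1")

# A later migration replaces only the binding; `orders` is untouched.
binding = Binding(cluster="redpanda-prod", topic="orders.v1")
```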

Decoupling Applications from Kafka Vendors with Data Products

The moment clients consume through data products, three major coupling vectors are removed.

Connectivity Decoupling

Applications no longer connect directly to Kafka brokers or clusters. Networking, security, routing, and load balancing terminate at the platform boundary.

During a vendor migration, the platform absorbs connectivity changes. Clients continue using the same endpoints exposed by the data product.
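As an illustration, assume the platform keeps a routing table from stable, client-facing endpoints to backend bootstrap servers. The `Router` class and all host names below are invented for this sketch. Clients are configured with the endpoint alone, so a vendor migration becomes a single rebinding on the platform side.

```python
class Router:
    """Hypothetical platform-side routing table: stable endpoint -> backend."""

    def __init__(self):
        self._routes: dict[str, str] = {}

    def bind(self, endpoint: str, bootstrap: str) -> None:
        self._routes[endpoint] = bootstrap

    def resolve(self, endpoint: str) -> str:
        # Looked up by the platform when a client connects; clients never see it.
        return self._routes[endpoint]


router = Router()
ENDPOINT = "orders.data-products.example.com:9092"  # the only value clients configure

router.bind(ENDPOINT, "kafka-1.old-vendor.internal:9092")
before = router.resolve(ENDPOINT)

# Vendor migration: rebind the backend; client configuration stays the same.
router.bind(ENDPOINT, "broker-1.new-vendor.internal:9092")
after = router.resolve(ENDPOINT)
```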

From Language-Specific Clients to API-First Access

Direct Kafka access couples applications to language-specific client libraries with uneven maturity, performance, and upgrade cycles.

Data products invert this model. Access becomes API-first and language-agnostic.

Protocol translation and client compatibility are handled by the platform, not application teams. Kafka’s wire protocol no longer dictates application behavior or upgrade timelines.
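A hedged illustration of protocol translation: the sketch assumes a language-agnostic JSON request with `product`, `key`, and `payload` fields, which a gateway turns into a Kafka-style record whose key and value are bytes. The request shape, the `translate_produce` function, the `TOPICS` mapping, and the required-field check are all hypothetical. Only the gateway deals with Kafka's record format, so any client that can send JSON can produce to the data product.

```python
import json
from typing import NamedTuple


class KafkaRecord(NamedTuple):
    """Simplified view of what a Kafka producer ultimately sends."""
    topic: str
    key: bytes
    value: bytes


# Platform-private configuration (assumed for this sketch).
TOPICS = {"orders": "orders.v1"}
REQUIRED_FIELDS = {"orders": {"order_id", "amount"}}


def translate_produce(request_body: str) -> KafkaRecord:
    """Translate a JSON produce request into a Kafka-style record,
    rejecting payloads that lack the product's required fields."""
    request = json.loads(request_body)
    product = request["product"]
    payload = request["payload"]
    missing = REQUIRED_FIELDS[product] - set(payload)
    if missing:
        raise ValueError(f"payload missing fields: {sorted(missing)}")
    return KafkaRecord(
        topic=TOPICS[product],
        key=request["key"].encode("utf-8"),
        value=json.dumps(payload, separators=(",", ":")).encode("utf-8"),
    )


body = '{"product": "orders", "key": "o-42", "payload": {"order_id": "o-42", "amount": 19.5}}'
record = translate_produce(body)
```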

Schema and Contract Decoupling

Data products formalize schemas as first-class contracts. They provide:

- Versioned schema evolution

- Explicit compatibility rules

- Governed change pipelines

Without a product boundary, topic schemas evolve organically, often breaking clients or requiring brittle compatibility hacks. With data products, schema governance becomes enforced and predictable.
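One common way to make compatibility rules explicit is a check that runs before a new schema version is published. The sketch below assumes a deliberately simplified schema model (field name mapped to a type and an optional default, with `None` meaning "no default") and a rule loosely modeled on Avro-style BACKWARD compatibility: readers on the new schema must still read data written with the old one, so added fields need defaults and existing fields may not change type. `backward_incompatibilities` is a hypothetical helper; real schema registries apply richer rules.

```python
from typing import Optional

# Simplified schema model (assumed): field name -> (type, default or None).
Schema = dict[str, tuple[str, Optional[object]]]


def backward_incompatibilities(old: Schema, new: Schema) -> list[str]:
    """List reasons why readers on `new` could fail on data written with `old`."""
    problems = []
    for name, (new_type, default) in new.items():
        if name not in old:
            if default is None:
                problems.append(f"added field '{name}' has no default")
        elif old[name][0] != new_type:
            problems.append(f"field '{name}' changed type {old[name][0]} -> {new_type}")
    # Fields removed in `new` are simply ignored when reading old data.
    return problems


v1 = {"order_id": ("string", None), "amount": ("double", None)}
v2_ok = {**v1, "currency": ("string", "USD")}
v2_bad = {"order_id": ("string", None), "amount": ("string", None), "region": ("string", None)}
```

A governed change pipeline would publish `v2_ok` and reject `v2_bad`, which both changes the type of `amount` and adds `region` without a default.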

Data & AI Governance

This decoupling becomes even more critical as AI-driven consumers enter the system.

AI agents reason over streaming data, correlate real-time signals with historical context, and trigger actions autonomously.

Data products ensure that both traditional services and autonomous agents always receive the data they expect, even as infrastructure evolves underneath.

Event-Driven Agents Will Define the Future of AI

AI agents transform Kafka from a passive messaging layer into an activation layer for intelligent systems.

Instead of client processes polling or batching data, AI agents are triggered by events, assess context, and act continuously in real time.

Event-driven architectures enable:

- Fanout to multiple independent agents acting on the same stream

- Partitioning for scalable workload distribution

- Stream processing for enriched contextual outputs

- Schema registry for enforced communication contracts

These capabilities unlock real-time AI systems that can reason, adapt, and act autonomously, rather than waiting for batch jobs or synchronous API calls.
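The first two capabilities can be illustrated with a small simulation. Records are routed to partitions by hashing their key, so all events for one entity stay ordered within one partition, and each independent agent (modeled as its own consumer group) reads the full stream with its own position. This is a toy model, not a Kafka client: Kafka's default partitioner hashes keys with murmur2, while this sketch uses `zlib.crc32` only as a stable, dependency-free hash, and `ConsumerGroup` is a hypothetical class.

```python
import zlib
from collections import defaultdict

NUM_PARTITIONS = 3


def partition_for(key: str) -> int:
    # Stable hash: the same key always maps to the same partition.
    return zlib.crc32(key.encode("utf-8")) % NUM_PARTITIONS


# The topic: one append-only log per partition.
partitions: dict[int, list] = defaultdict(list)
events = [("sensor-1", 20.1), ("sensor-2", 18.4), ("sensor-1", 20.7), ("sensor-3", 25.0)]
for key, value in events:
    partitions[partition_for(key)].append((key, value))


class ConsumerGroup:
    """Each agent uses its own group, so every agent sees every event (fanout)."""

    def __init__(self, name: str):
        self.name = name
        self.positions = defaultdict(int)  # partition -> next offset to read

    def poll(self) -> list:
        batch = []
        for p, log in sorted(partitions.items()):
            batch.extend(log[self.positions[p]:])
            self.positions[p] = len(log)
        return batch


anomaly_agent = ConsumerGroup("anomaly-agent")
forecast_agent = ConsumerGroup("forecast-agent")
seen_by_anomaly = anomaly_agent.poll()
seen_by_forecast = forecast_agent.poll()
```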

Migration Becomes a Platform Responsibility

When data products sit between clients and Kafka, migration stops being an application problem and becomes a pure platform responsibility.

This is exactly where the Zilla Platform fits.

Zilla Platform provides a data plane that implements data products as stable, API-first interfaces while isolating applications from Kafka vendors, clusters, and protocols. Producers and consumers interact with these data products rather than with Kafka directly.

Under this model, a Kafka vendor migration does not require:

- Client library upgrades

- Application redeployments

- Dual write or dual read logic

- Coordinated cutovers across teams

Instead, the platform team migrates the backend Kafka implementation behind the data product boundary. Zilla Platform continues to serve the same contracts, schemas, and APIs throughout the transition.

From the client perspective, nothing changes.

Zilla Platform Solves the Hard Parts of Migration

Zilla Platform addresses the core failure modes of Kafka migrations by design.

Zilla Platform provides a unified management console where teams can explore Kafka clusters and schemas, design streaming APIs, and productize Kafka topics into real-time data products that carry explicit, versioned contracts.

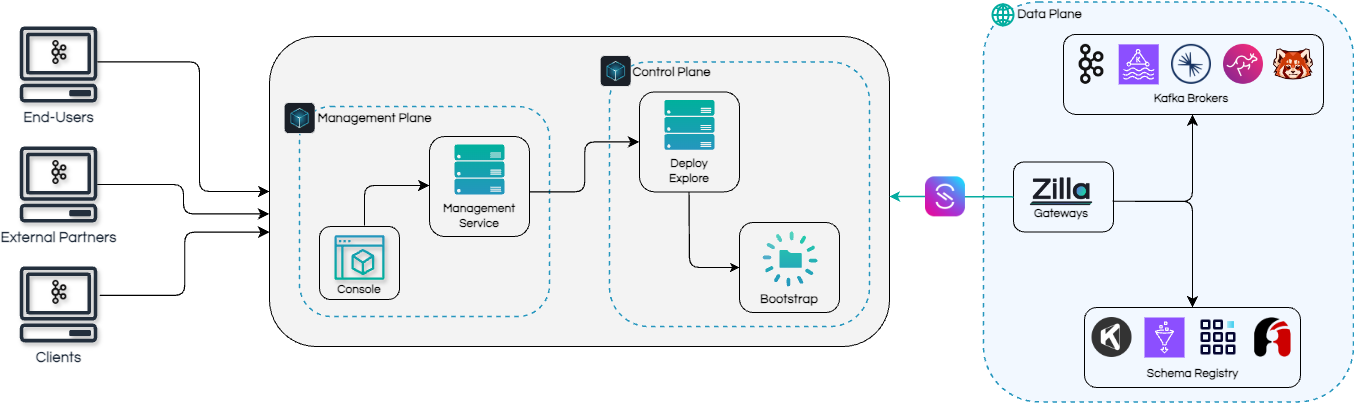
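The versioned contracts described here can be pictured as AsyncAPI documents. The sketch below derives a minimal AsyncAPI 3.0 document from a topic name and a simplified field-to-type map; `topic_to_asyncapi` and its inputs are hypothetical, and this is not how Zilla Platform generates contracts. It only shows the kind of explicit contract a client can depend on instead of a raw topic.

```python
def topic_to_asyncapi(topic: str, fields: dict[str, str], version: str) -> dict:
    """Build a minimal AsyncAPI 3.0 document for consuming one Kafka topic.
    `fields` maps field names to JSON Schema types (simplified, assumed input)."""
    channel_id = topic.replace(".", "_")
    message_id = f"{channel_id}_message"
    return {
        "asyncapi": "3.0.0",
        "info": {"title": f"{topic} data product", "version": version},
        "channels": {
            channel_id: {
                "address": topic,  # for Kafka, the channel address is the topic
                "messages": {
                    message_id: {
                        "payload": {
                            "type": "object",
                            "properties": {n: {"type": t} for n, t in fields.items()},
                            "required": sorted(fields),
                        }
                    }
                },
            }
        },
        "operations": {
            f"receive_{channel_id}": {
                "action": "receive",
                "channel": {"$ref": f"#/channels/{channel_id}"},
            }
        },
    }


doc = topic_to_asyncapi("orders.v1", {"order_id": "string", "amount": "number"}, "1.0.0")
```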

Separation of Planes Makes Migration Predictable

Zilla’s architecture separates concerns across three planes:

- Management Plane: Defines intent and governance, letting platform teams publish and catalogue data products.

- Control Plane: Translates policy and product definitions into runtime configurations.

- Data Plane: Executes governed APIs and data products via Zilla Gateways, mediating access to Kafka.

During a vendor migration, only the data plane wiring changes. The data product contracts remain unchanged.
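A hedged sketch of the separation of planes, using hypothetical names throughout: a management-plane `ProductDefinition` states intent, a control-plane function compiles it together with a `BackendBinding` into a runtime configuration, and the data plane would execute that configuration. Compiling the same definition against two backends shows that only the backend wiring differs. None of these structures reflect Zilla's actual configuration format.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProductDefinition:
    """Management plane: intent and governance, authored by platform teams."""
    name: str
    version: str
    schema_ref: str
    allowed_readers: tuple


@dataclass(frozen=True)
class BackendBinding:
    """Platform-private wiring to a specific Kafka cluster."""
    vendor: str
    bootstrap: str
    topic: str


def compile_runtime_config(product: ProductDefinition, backend: BackendBinding) -> dict:
    """Control plane: translate intent plus wiring into a data-plane config."""
    return {
        "contract": asdict(product),  # what clients see
        "backend": asdict(backend),   # what only the gateway sees
    }


orders = ProductDefinition("orders", "1.0.0", "orders-value-v1", ("billing-service",))
before = compile_runtime_config(orders, BackendBinding("apache-kafka", "kafka:9092", "orders.v1"))
after = compile_runtime_config(orders, BackendBinding("automq", "automq:9092", "orders.v1"))
changed = {section for section in before if before[section] != after[section]}
# changed == {'backend'}: the contract section is identical across vendors.
```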

Zilla Gateways handle client connections and protocol mediation, enforce schemas, and manage security at the edge.

Kafka can be migrated cluster by cluster or vendor by vendor behind the scenes, while clients continue consuming the same data products.

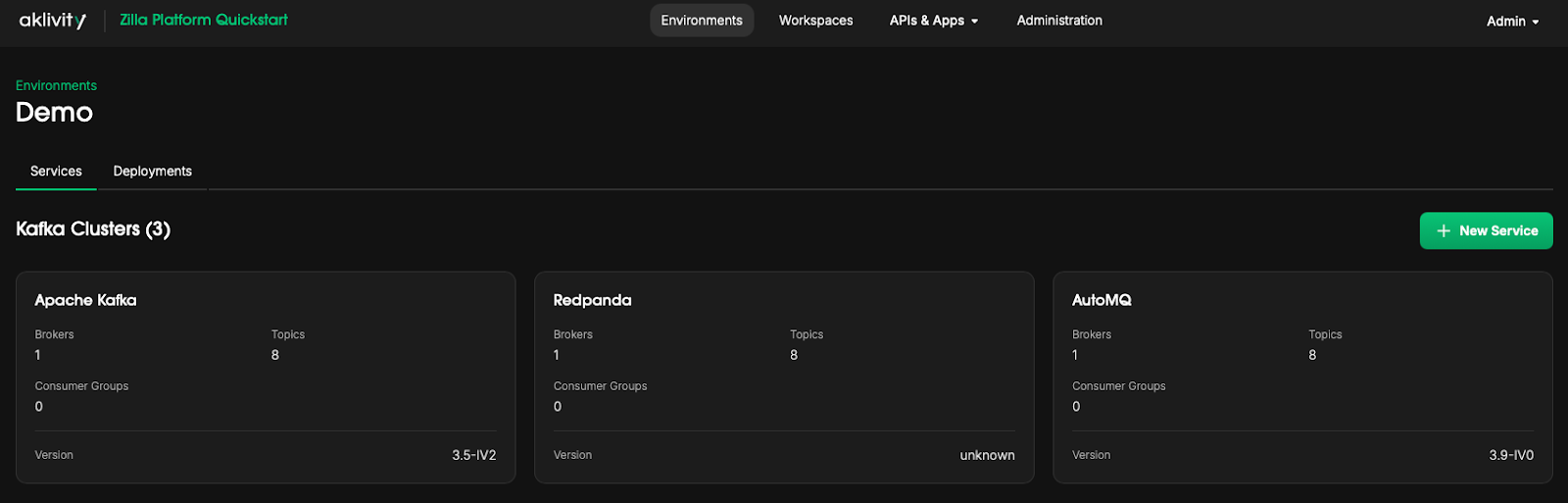

Kafka Migration with Zilla Platform: A Practical Demo

The following demo shows how this model works in practice.

It walks through how teams can introduce additional Kafka flavors such as Apache Kafka, AutoMQ, or Redpanda alongside existing deployments without changing client code, security configuration, or schemas.

High-Level Flow

This demo uses Zilla Platform to sit between applications and Kafka, exposing event streams as governed, API-first data products.

Multiple Kafka backends run in parallel behind the platform, allowing migration without exposing infrastructure changes to clients.

- Start with an Apache Kafka cluster

- Add AutoMQ or Redpanda as an additional Kafka backend

- Replicate data between clusters using MirrorMaker

- Extract AsyncAPI contracts from existing Kafka topics

- Productize the streams as a governed data product

- Deploy the data product on Apache Kafka

- Redeploy the same data product on AutoMQ or Redpanda

At every step, producers and consumers continue operating without code changes, configuration updates, or downtime.
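The replication step is where offsets matter. MirrorMaker copies records between clusters, but offsets in the target are not guaranteed to match the source, which is why MirrorMaker 2 emits checkpoints that translate consumer group offsets. The toy replicator below mimics that idea in memory: it copies records, keeps a source-to-target offset map, and uses it to resume a consumer group on the new backend without duplicates or gaps. `ToyReplicator` is a hypothetical illustration under these simplifying assumptions, not MirrorMaker's implementation.

```python
class ToyReplicator:
    """In-memory stand-in for offset-translating replication (not MirrorMaker)."""

    def __init__(self, target_log: list):
        self.target_log = target_log
        self.offset_map: dict[int, int] = {}  # source offset -> target offset

    def replicate(self, source_log: list) -> None:
        # Copy only records not yet replicated, remembering where each landed.
        start = max(self.offset_map, default=-1) + 1
        for src_offset in range(start, len(source_log)):
            self.target_log.append(source_log[src_offset])
            self.offset_map[src_offset] = len(self.target_log) - 1

    def translate(self, committed: int) -> int:
        """Map a committed source offset (next record to read) to the target.
        Assumes the offset falls within the range replicated so far."""
        if committed in self.offset_map:
            return self.offset_map[committed]
        # Consumer has read everything replicated: resume after the last copy.
        return self.offset_map[committed - 1] + 1


source_log = ["e0", "e1", "e2", "e3"]
target_log = ["x", "y"]  # target already holds two records, so offsets shift
replicator = ToyReplicator(target_log)
replicator.replicate(source_log)

# A consumer group committed offset 2 on the source (it has read e0 and e1).
resume_at = replicator.translate(2)  # 4
remaining = target_log[resume_at:]   # ['e2', 'e3']
naive = target_log[2:]               # would re-deliver e0 and e1
```

In the demo, this kind of bookkeeping stays on the platform side, which is what lets the data product be redeployed on the new backend without clients tracking offsets themselves.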

This demo is not about tooling convenience. It demonstrates a structural shift.

Kafka vendor migration stops being a risky, organization-wide event and becomes a routine platform operation. Applications, services, and AI agents remain insulated from infrastructure churn.

Ready to try it yourself? Request Access to the Zilla Data Platform

Let’s Get Started!

Reach out for a free trial license or request a demo with one of our data management experts.