The Why & How of Centralized Data Governance in Zilla Across Protocols

Data governance in Zilla in the context of different protocols like HTTP, MQTT and, of course, Kafka.

Introduction

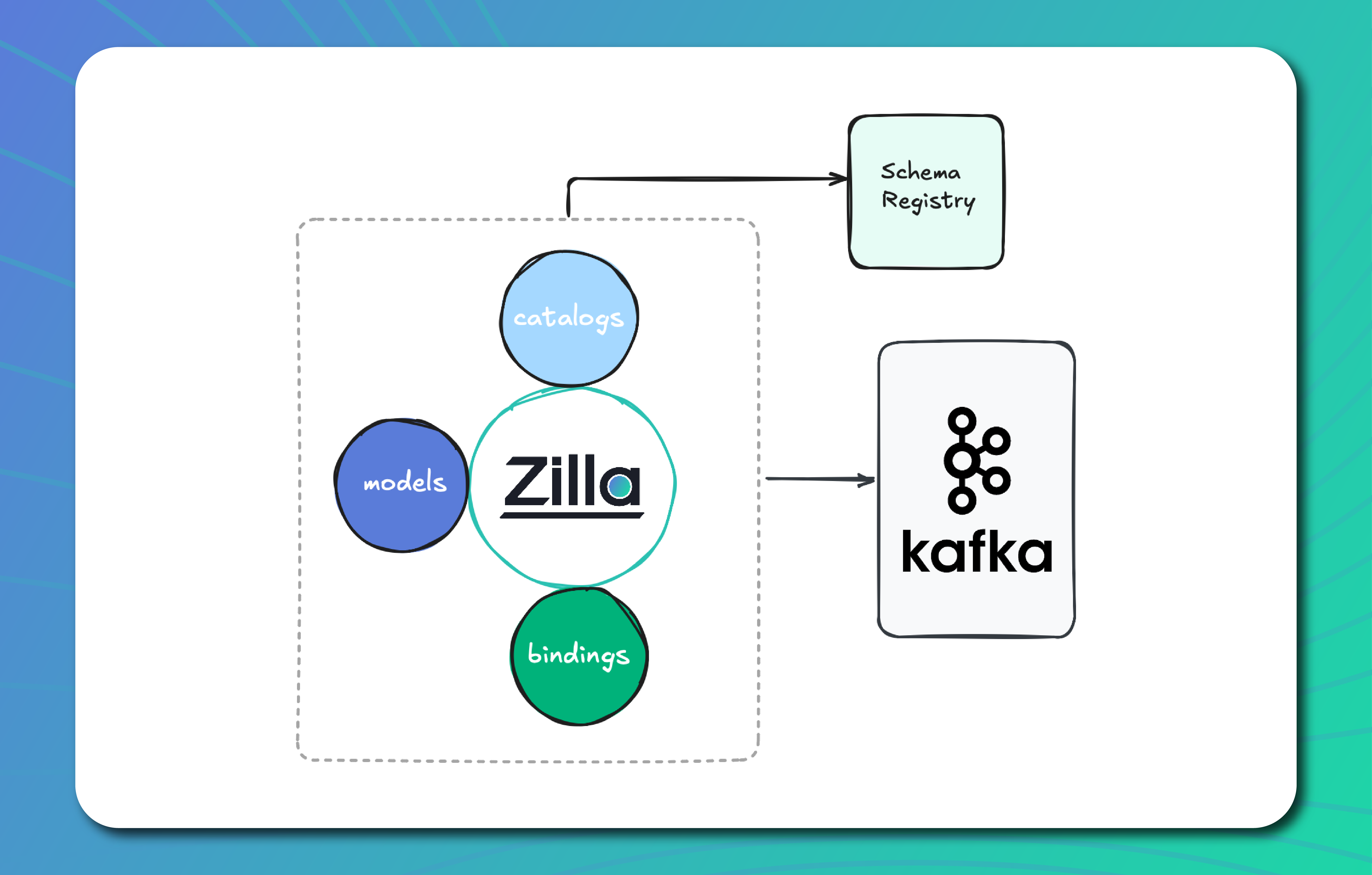

As the title suggests, this post delves into data governance in Zilla across protocols such as HTTP, MQTT, and Kafka. Throughout the discussion, I'll frequently refer to two key terms, Catalog and Model, so before we dive deeper it's only fair to explain what they mean.

Catalog

A Catalog is the component that communicates with a schema registry on Zilla's behalf, handling schema management such as retrieving the schemas used for validation. A single Zilla instance can define one or more Catalogs, and each Catalog can be shared across bindings (protocols).

Model

A Model represents one of the data formats supported by Zilla and, with the help of a Catalog, ensures that events conform to the configured schema. Models are binding-specific: each binding (HTTP, MQTT, Kafka) must define its own.

Both a Catalog and a Model must be configured for structured data validation to work in Zilla, so that events are validated against the schema and data format specific to each binding.

The Why

Why do we need Schema Management & Data Validation?

The need for data consistency, accuracy, and validation may seem obvious, but in this section we want to explain why we decided to give these features first-class support in Zilla.

Given that Zilla is designed to make Apache Kafka feel natural for non-Kafka clients, it only makes sense to offer robust support for message validation. But since Zilla is a multi-protocol proxy, we asked ourselves: why stop at Kafka? So we took schema management and data validation further with the following features:

- Centralized Catalog configuration that can be used by other supported protocols to enforce validation.

- Support for Various Registries:

-- Apicurio Registry

-- Filesystem

-- Inline

-- Karapace Schema Registry

-- AWS Glue (Zilla+)

-- Confluent Schema Registry (Zilla+)

- Support for both structured and unstructured data formats.

- Encoding messages according to the configured Catalog, which makes it seamless to migrate between different flavours of schema registry.

- Support for these concepts in the HTTP and MQTT protocols, not just Kafka.
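To make the centralized Catalog idea concrete, here is a minimal sketch of a catalogs section that declares two Catalogs side by side: a Karapace registry (using the same URL as the quickstart later in this post) and a filesystem Catalog. The catalog names, the item subject, and the schemas/item.avsc path are hypothetical placeholders, and the filesystem option layout is our assumption based on the Catalog documentation, so please verify it there before relying on it.

```yaml
catalogs:
  # Remote registry: schemas are fetched from Karapace over HTTP.
  karapace_catalog:
    type: karapace
    options:
      url: http://karapace-reg:8081
      context: default
  # Local files: schemas are read from disk alongside zilla.yaml.
  # Hypothetical subject name and path, for illustration only.
  local_catalog:
    type: filesystem
    options:
      subjects:
        item:
          path: schemas/item.avsc
```

Because a Model refers to a Catalog by name, moving a binding from one registry flavour to another is largely a matter of pointing its Model at a different Catalog entry rather than rewriting the Model itself.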

Another interesting challenge we set out to solve was converting binary, machine-oriented formats like Avro and Protobuf into human-readable formats like JSON, all while preserving the original format within the Kafka topic. This keeps the data accessible and readable, whether it is fetched directly by a Kafka client or proxied through protocols like HTTP or MQTT.
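As a sketch of where such a schema can live, assuming the inline Catalog from the registry list above, an Avro record schema can be embedded directly in zilla.yaml. The inline_catalog name, the item subject, and the Item record with its id and status fields are all hypothetical, and the subjects/schema layout is our reading of the Catalog documentation rather than a confirmed reference.

```yaml
catalogs:
  inline_catalog:
    type: inline
    options:
      subjects:
        # Hypothetical subject holding an Avro record schema.
        item:
          schema: |
            {
              "type": "record",
              "name": "Item",
              "fields": [
                { "name": "id", "type": "string" },
                { "name": "status", "type": "string" }
              ]
            }
```

A Kafka topic's value Model could then reference this subject with model: avro and view: json, so that records stay Avro-encoded in the topic while HTTP or MQTT clients see JSON such as {"id": "42", "status": "in-stock"}.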

The How

Now that we’ve covered the theory, let’s dive into the technical details and configure Zilla to see these features in action.

To make it easier for you, we’ve curated a quickstart example complete with the necessary startup scripts: HTTP-Kafka (Karapace Schema Registry)

Let’s dissect the zilla.yaml in this example to understand the configuration.

Step 1: Declare the Catalog

```yaml
my_catalog:
  type: karapace
  options:
    url: http://karapace-reg:8081
    context: default
```

Step 2: Configure the Model for the Kafka binding

```yaml
value:
  model: avro
  view: json
  catalog:
    my_catalog:
      - strategy: topic
        version: latest
```
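For context, here is a sketch of where the Step 2 value Model sits inside a Kafka binding, next to the Step 1 Catalog. The binding names and the items-snapshots topic are hypothetical placeholders, and the exit binding is assumed to be defined elsewhere; the quickstart's own zilla.yaml remains the authoritative version.

```yaml
catalogs:
  my_catalog:
    type: karapace
    options:
      url: http://karapace-reg:8081
      context: default

bindings:
  # Hypothetical binding and topic names, for illustration only.
  south_kafka_cache_client:
    type: kafka
    kind: cache_client
    options:
      topics:
        - name: items-snapshots
          # Validate record values against the latest schema for this topic;
          # store Avro in Kafka, expose JSON to non-Kafka clients.
          value:
            model: avro
            view: json
            catalog:
              my_catalog:
                - strategy: topic
                  version: latest
    # Assumed to be declared elsewhere in the same zilla.yaml.
    exit: south_kafka_cache_server
```

With strategy: topic, the registry subject is derived from the topic name, so the Model does not need to hard-code a subject; check the Model documentation for the exact naming convention Zilla applies.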

And that’s all. You have successfully added all the required configuration to enable validation.

Now, you can run and test this example by following the steps outlined here.

Beyond Kafka: HTTP & MQTT

Similarly, we can extend this validation to HTTP and MQTT bindings:

- HTTP: Validate headers, path parameters, query parameters, and request/response content.

- MQTT: Validate message content and user properties.
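Here is a sketch of what HTTP and MQTT Models might look like, reusing my_catalog from Step 1. The paths, topic, subjects (item-request, temperature-reading), and the device-id property are hypothetical, and the option layout (requests, params, topics, user-properties) is our reading of the binding documentation, so treat it as a starting point rather than a reference. Routes and exits are omitted for brevity.

```yaml
bindings:
  # HTTP: validate a path parameter and the JSON request body.
  north_http_server:
    type: http
    kind: server
    options:
      requests:
        - path: /items/{id}
          method: PUT
          content-type:
            - application/json
          params:
            path:
              id:
                model: string
          content:
            model: json
            catalog:
              my_catalog:
                # Hypothetical JSON Schema subject in the registry.
                - subject: item-request
                  version: latest

  # MQTT: validate message content and a user property.
  north_mqtt_server:
    type: mqtt
    kind: server
    options:
      topics:
        - name: sensors/temperature
          content:
            model: json
            catalog:
              my_catalog:
                - subject: temperature-reading
                  version: latest
          user-properties:
            device-id:
              model: string
```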

This ensures that messages conform to their schema before they reach their destination, across any protocol.

Conclusion

In this post, we've explored how Zilla enables centralized schema management and data validation across multiple protocols like HTTP, MQTT, and Kafka. By combining Catalogs and Models, Zilla offers a seamless way to ensure that data is validated, and encoded according to the configured Catalog where required, before it reaches its destination, regardless of the protocol in use.

With this powerful configuration, Zilla empowers you to manage, validate, and transform data across distributed systems effortlessly, ensuring both flexibility and data consistency.

What’s Next?

Now that you have a solid understanding of how to configure Zilla for data validation, we encourage you to try out zilla-examples, explore different protocols, and see these features in action.

📌 Get Started:

📖 Catalog Documentation

📖 Model Documentation

💬 Join the Zilla Community! Share your experiences and questions on Slack. 🚀

Let’s Get Started!

Reach out for a free trial license or request a demo with one of our data management experts.