The Difference between "Supports Kafka" and Kafka-Native

Zilla is "Kafka-Native," but what does this mean, and more importantly, why does it matter? Let's take a closer look.

Introduction

Kafka is a widely used distributed streaming platform that enables the creation of efficient real-time data pipelines and streaming applications. Internally, Kafka utilizes a binary protocol over TCP, which governs how data is exchanged between clients and brokers within Kafka.

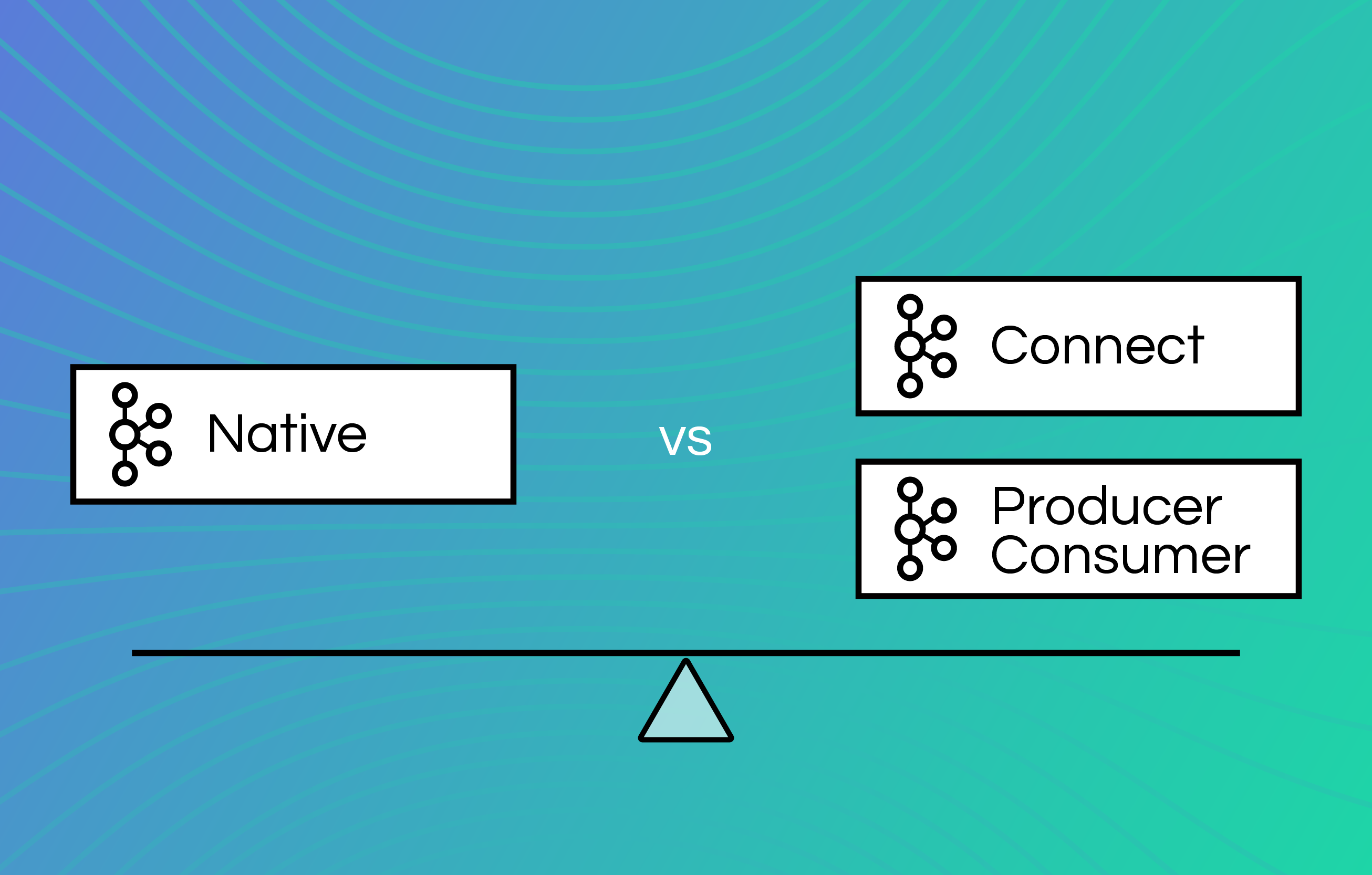

Generally, Kafka offers three connection options for developers to integrate with Kafka and enable real-time data processing between their applications and services:

- Kafka producer and consumer libraries: These libraries offer a flexible way to interact with Kafka as well as read and send data to Kafka topics. The official libraries are written in Java and Scala, but many third-party client libraries are also available for other languages, including Python, Go, and C++.

- Kafka Connect: This component allows you to create reusable connectors between your external applications and Kafka. It's built on top of the producer and consumer libraries and standardizes integrating other data systems with Kafka.

- Direct support for the Kafka wire protocol: This approach allows you to communicate with Kafka brokers directly over the network using the Kafka wire protocol. This method does not require any of the higher-level abstractions provided by the Kafka producer and consumer libraries or Kafka Connect.

These connection options are categorized as either "supporting Kafka" or "Kafka-native." The producer and consumer APIs and Kafka Connect "support Kafka" because they are built on top of Kafka but do not directly connect to the Kafka wire protocol. In contrast, the Kafka wire protocol is "Kafka-native" and provides a low-level interface for developers who require greater control and flexibility over their Kafka deployment.

This article discusses these different Kafka connection options, including how they function, their advantages and disadvantages, and the implications of an application being Kafka-supported versus Kafka-native.

Kafka Producer and Consumer Libraries

The Kafka producer and consumer libraries provide a simple API for sending and receiving messages from Kafka brokers. As mentioned, applications that use the producer and consumer APIs "support Kafka."

The Kafka producer library contains useful functions and classes that detail how to configure your Kafka client as well as format, serialize, and publish records to Kafka topics and topic partitions. It also offers a wide variety of options, such as batch processing and compression.

On the other hand, the Kafka consumer library focuses on retrieving records from a Kafka cluster. It contains useful functions and classes to create a Kafka consumer client, create consumer groups, manage broker requests, and distribute record consumption evenly among different topic partitions.

Within the Kafka ecosystem, producers and consumers play a vital role in the flow of data. When a producer is initialized, it connects to the brokers listed in its configuration, identifying itself with its client ID and authenticating if the cluster requires it. The producer then selects the topic to which it will publish records.

Topics in Kafka are partitioned to improve scalability and performance. The producer can also opt to define the partition to which each record should be delivered. Alternatively, Kafka can select a partition on its own based on the key of the record.
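To make key-based partition selection concrete, here is a minimal sketch of the idea: hash the key's bytes and take the result modulo the number of partitions. The class and method names are hypothetical, and `Arrays.hashCode` is only a stand-in; the Java client's default partitioner uses a different hash (murmur2) over the serialized key, so the partition numbers printed here will not match a real cluster.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

public class PartitionSketch {

    // Maps a record key to a partition index in [0, numPartitions).
    // Arrays.hashCode is a stand-in hash for illustration only; it is NOT
    // the hash function used by Kafka's default partitioner.
    public static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        int hash = Arrays.hashCode(keyBytes);
        // Clear the sign bit so the modulo result is never negative.
        return (hash & 0x7fffffff) % numPartitions;
    }

    public static void main(String[] args) {
        int partitions = 6;
        // The repeated key "user-1" lands on the same partition both times.
        for (String key : new String[] {"user-1", "user-2", "user-1"}) {
            System.out.println(key + " -> partition " + partitionFor(key, partitions));
        }
    }
}
```

The property that matters is determinism: records that share a key always map to the same partition, which is what preserves per-key ordering.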

The following is sample producer code from the Apache Kafka documentation:

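    import java.util.Properties;
    import org.apache.kafka.clients.producer.KafkaProducer;
    import org.apache.kafka.clients.producer.Producer;
    import org.apache.kafka.clients.producer.ProducerRecord;

    Properties props = new Properties();
    props.put("bootstrap.servers", "localhost:9092");
    props.put("linger.ms", 1);
    props.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer");
    props.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer");

    Producer<String, String> producer = new KafkaProducer<>(props);
    // Send 100 records whose key and value are both the loop counter.
    for (int i = 0; i < 100; i++) {
        producer.send(new ProducerRecord<String, String>("my-topic", Integer.toString(i), Integer.toString(i)));
    }
    // Flush any buffered records and release the producer's resources.
    producer.close();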

To retrieve data from Kafka, the consumer must first subscribe to one or more topics and the partitions that are associated with those topics. After receiving information about the topic and its partitions from the broker, the consumer proceeds to pull records from the Kafka cluster. In this way, consumers pull the records that producers have published, which keeps data flowing through the system.
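The consumer library's job of spreading partitions evenly across the members of a consumer group can be pictured with a simplified round-robin sketch. This is an illustration under stated assumptions, not Kafka's actual assignor implementation: the class name, method name, and consumer IDs are hypothetical, and a single topic with partitions numbered from 0 is assumed.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class AssignmentSketch {

    // Deals partitions 0..numPartitions-1 to the consumers in turn, so the
    // partition counts of any two consumers differ by at most one.
    public static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        if (consumers.isEmpty()) {
            throw new IllegalArgumentException("at least one consumer is required");
        }
        Map<String, List<Integer>> assignment = new LinkedHashMap<>();
        for (String consumer : consumers) {
            assignment.put(consumer, new ArrayList<>());
        }
        for (int partition = 0; partition < numPartitions; partition++) {
            String owner = consumers.get(partition % consumers.size());
            assignment.get(owner).add(partition);
        }
        return assignment;
    }

    public static void main(String[] args) {
        // Seven partitions shared by three hypothetical group members.
        System.out.println(assign(List.of("consumer-a", "consumer-b", "consumer-c"), 7));
    }
}
```

In a real cluster, the assignment is negotiated through the group management protocol and is recomputed whenever a consumer joins or leaves the group.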

Considerations for Applications Using the Producer and Consumer Libraries

The following are some of the producer and consumer libraries' features and limitations.

Straightforward Method of Producing and Consuming Messages

As the Kafka producer and consumer libraries are abstractions of the core Kafka wire protocol, they can help with a lot of complex low-level Kafka details such as message serialization and compression. They can also simplify advanced features such as message filtering and batching.

This provides you with a more straightforward way to produce and consume messages in Kafka and develop event streaming capabilities in your applications, reducing the risk of errors.

Access to Advanced Kafka Features

Compared to the other connection options, the Kafka libraries offer more control than Kafka Connect but less control than wire protocol access. You can control and tweak more advanced Kafka features compared to Kafka Connect. However, this also requires more code implementation to get started than Kafka Connect.

Lack of Official Packages in Other Languages

The Kafka producer and consumer libraries lack official packages in other languages. The official libraries are written for JVM languages such as Java and Scala, offering limited flexibility for other programming languages and development environments. All other packages offering a Kafka producer and consumer client are owned and maintained by third parties.

Kafka Connect

Kafka Connect is built on top of the Kafka producer and consumer libraries and is focused on creating a framework for building reusable connections and integrations between external systems and Kafka. Like applications that use the producer and consumer libraries, those that use Kafka Connect only "support Kafka."

Kafka Connect, as a tool, facilitates streaming data between Apache Kafka and other systems in a manner that is both scalable and reliable. With Kafka Connect, it's easy to define connectors that move large volumes of data in and out of Kafka, making it available for stream processing with low latency. It can be used to ingest entire databases or collect metrics from application servers into Kafka topics. Moreover, you can also utilize export jobs that deliver data from Kafka topics into secondary storage, query systems, or batch systems for offline analysis.

Kafka Connect simplifies connector development, deployment, and management through its standardized API for Kafka connectors. It can scale up to a large, centrally managed service that supports an entire business, or it can scale down to serve development, testing, and small production deployments. Both distributed and standalone modes are supported. It also provides a REST interface that enables the submission and management of connectors via an easy-to-use REST API. Kafka Connect features automatic offset management and is distributed and scalable by default, leveraging Kafka's existing group management protocol.

Each distinct type of external system needs its own connector to send data to or receive data from Kafka. Systems that feed data into Kafka need a source connector, while systems that receive data from Kafka need a sink connector. Once a connector is created, it can be reused by you and anyone you share it with. Connectors are built and maintained separately from the main Kafka codebase. If you need a specific or custom connector, you must conduct additional research to find it, looking through resources such as Confluent Hub.

Whether you create your own connector or get one from a third party, you'll need to configure it to fit your Kafka cluster and your particular use case. Here's a sample JSON configuration for a connector between a Kafka topic and a Postgres database:

    {
      "name": "postgres-sink-eth",
      "config": {
        "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
        "tasks.max": "1",
        "topics": "crypto_ETH",
        "key.converter": "org.apache.kafka.connect.storage.StringConverter",
        "value.converter": "org.apache.kafka.connect.json.JsonConverter",
        "connection.url": "jdbc:postgresql://questdb:8812/qdb?useSSL=false",
        "connection.user": "admin",
        "connection.password": "quest",
        "key.converter.schemas.enable": "false",
        "value.converter.schemas.enable": "true",
        "auto.create": "false",
        "insert.mode": "insert",
        "pk.mode": "none"
      }
    }
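A configuration like this is typically registered through Kafka Connect's REST interface by sending it in a POST request to the `/connectors` endpoint. The following is a minimal sketch that builds such a request with Java's built-in HTTP client without sending it. The class and method names are hypothetical, the base URL `http://localhost:8083` assumes a local Connect worker on its default port, and the JSON body is abbreviated for readability.

```java
import java.net.URI;
import java.net.http.HttpRequest;

public class ConnectRequestSketch {

    // Builds (but does not send) a POST request that would register a
    // connector with a Kafka Connect worker at the given base URL.
    public static HttpRequest createConnectorRequest(String connectBaseUrl, String connectorJson) {
        return HttpRequest.newBuilder()
                .uri(URI.create(connectBaseUrl + "/connectors"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(connectorJson))
                .build();
    }

    public static void main(String[] args) {
        // Abbreviated body; a real request would carry the full configuration.
        String json = "{\"name\": \"postgres-sink-eth\", \"config\": {"
                + "\"connector.class\": \"io.confluent.connect.jdbc.JdbcSinkConnector\", "
                + "\"topics\": \"crypto_ETH\"}}";
        HttpRequest request = createConnectorRequest("http://localhost:8083", json);
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To actually submit the request, you could pass it to `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` while a Connect worker is running.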

Considerations for Applications Using Kafka Connect

The following are some of Kafka Connect's features and limitations.

Standardized Framework for Integrating Systems with Kafka

Kafka Connect offers a standardized framework to efficiently integrate your systems with Kafka and stream data through real-time data pipelines. You can use it to reliably set up and scale pipelines that continually process large data volumes for your specific use case. Apart from easy integration and scalability, Kafka Connect is well-known and community-managed, allowing you to take advantage of a large number of prebuilt connectors for popular data sources and sinks. This can save you development time and effort.

Lack of Flexibility and Customization

Compared to other connection options, Kafka Connect is less flexible because it is designed to be simple and generic, abstracting away from the native wire protocol of Kafka. This may limit your ability to customize behavior and handle edge cases. When relying on prebuilt or third-party connectors, you are essentially trusting their code to be efficient for your use case. Custom code may be necessary for specific data processing or transformation requirements or to optimize performance. Using third-party connectors can also cause issues in production with inconsistent configuration parameters and vague error messages, which can be a challenge for DevOps and performance optimization. Additionally, some connectors may have technical and licensing limits and related risks in their use.

Increased Chance of Latency Issues

As a distributed system, Kafka Connect can also introduce unwanted latency into the system, which can impact the overall system performance and user experience. This can occur when data is processed through Kafka Connect and needs to be transferred between multiple nodes before it reaches its final destination. Each transfer introduces additional latency, and the processing time for each record can vary depending on factors such as the complexity of the data transformation and the system's load.

Separate Deployment, Management, Monitoring, and Scaling

A Kafka Connect cluster is a separate entity from your main Kafka cluster, which requires separate deployment, management, monitoring, and scaling. This can be both a benefit and a drawback. Separation provides greater flexibility and control over the integration process. It allows for independent scaling of the Kafka Connect cluster, which optimizes performance and reduces resource consumption, minimizing the impact on the main application. However, it also introduces additional complexity and management overhead, requiring additional resources and expertise and making it more difficult to troubleshoot and debug issues.

Kafka Wire Protocol

The Kafka wire protocol provides the lowest-level access and is the only "Kafka-native" way to connect to Kafka. It allows developers to build custom logic for sending and receiving messages as well as communicating directly with Kafka brokers. It enables Kafka's core functionalities without the abstractions of Kafka Connect and the producer and consumer libraries.

The Kafka wire protocol is the foundation of communication between Kafka clients and brokers. After a client establishes a TCP connection with a broker, a handshake takes place in which the client and broker exchange the Kafka API versions they support and, where security is configured, authenticate the connection. The client then sends a metadata request, and the response describes the topics, partitions, and brokers in the cluster, allowing the client to determine where to produce or consume messages. Producing and consuming are then carried out through the protocol's Produce and Fetch requests, respectively.
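To give a feel for what working at this level involves, here is a minimal sketch that encodes the bytes of an ApiVersions request (API key 18, version 0), which a client can send to learn the API versions a broker supports. It assumes the non-flexible request header layout used by that version: a 4-byte size prefix followed by the API key, API version, correlation ID, and a length-prefixed client ID, all big-endian. The class name, method name, and client ID are hypothetical, and the sketch only builds the frame; it does not open a connection or parse a response.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

public class ApiVersionsFrameSketch {

    static final short API_KEY_API_VERSIONS = 18;

    // Encodes a size-prefixed ApiVersions v0 request: a 4-byte length, then
    // the request header (api key, api version, correlation id, client id).
    // ApiVersions v0 has an empty request body.
    public static byte[] encode(int correlationId, String clientId) {
        byte[] client = clientId.getBytes(StandardCharsets.UTF_8);
        int headerSize = 2 + 2 + 4 + 2 + client.length;
        // ByteBuffer writes multi-byte values in big-endian order by default.
        ByteBuffer buffer = ByteBuffer.allocate(4 + headerSize);
        buffer.putInt(headerSize);               // size of everything after this field
        buffer.putShort(API_KEY_API_VERSIONS);   // which API is being called
        buffer.putShort((short) 0);              // version of that API
        buffer.putInt(correlationId);            // echoed back in the response
        buffer.putShort((short) client.length);  // client id length
        buffer.put(client);                      // client id bytes
        return buffer.array();
    }

    public static void main(String[] args) {
        byte[] frame = encode(1, "demo-client");
        System.out.println("frame length: " + frame.length + " bytes");
    }
}
```

Every other request, including metadata, produce, and fetch, follows the same size-prefixed pattern with its own versioned body, which is where most of the implementation effort goes.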

Though it is the most flexible and powerful way to connect with Kafka, implementing the protocol can be challenging. It requires a deep understanding of the Kafka ecosystem and the various components involved. However, there are solutions that can simplify the implementation of the Kafka wire protocol. For example, Aklivity Zilla provides a managed Kafka wire protocol implementation that allows users to connect to Kafka without having to directly implement the protocol themselves.

Considerations for Applications Using the Kafka Wire Protocol

The following are some of the features and limitations of using the Kafka wire protocol to communicate directly with Kafka.

Kafka-Native Accessibility

Applications using the native Kafka protocol work seamlessly with Kafka and simplify the process of connecting to Kafka brokers, publishing and consuming messages, and performing other common Kafka-related tasks. "Kafka-native" applications are the best solution if you want fine-grained control over your Kafka deployment or have a custom use case that isn't covered by the higher-level abstractions in the other connection options.

Because clients speak the wire protocol directly to brokers, with no additional layers of abstraction or middleware in between, this approach can provide lower latency than other methods of connecting to Kafka. Actual latency still depends on factors such as the network environment, the hardware and software used, and the specifics of the Kafka-based application, but removing intermediate layers remains a useful way to reduce latency and improve performance.

Increased Complexity

Accessing Kafka via the wire protocol can be more complex and time-consuming than using the higher-level abstractions provided by Kafka Connect or the producer and consumer libraries. It also requires a deep understanding of the Kafka protocol and can involve more manual configuration and setup. However, Aklivity Zilla will handle all of the implementation for you, so you don't have to worry about the complexity and potential errors but can still benefit from the advantages.

Conclusion

In this article, you explored the various connection options available for your Kafka-enabled systems, as well as their benefits and drawbacks.

Using the Kafka protocol and Aklivity Zilla provides several benefits over using Kafka clients and Kafka Connect, such as lower latency and improved performance by eliminating unnecessary middleware or abstractions. Applications with native Kafka protocol support can provide greater accessibility and ease of use, simplifying the process of connecting to Kafka brokers, publishing and consuming messages, and integrating Kafka into existing workflows. By leveraging these tools, you can save time, reduce the risk of errors or performance issues, and improve the overall efficiency of your Kafka-based systems.

Let’s Get Started!

Reach out for a free trial license or request a demo with one of our data management experts.